The @justsaysinmice problem goes far deeper than bad science reporting

We are delighted to feature this guest blog on mouse models in animal research from Nicole C. Nelson. Nicole is a professor in the School of Medicine and Public Health at the University of Wisconsin-Madison, and the author of the 2018 book Model Behavior, published by The University of Chicago Press.

In the past month, the Twitter account @justsaysinmice has gained over fifty thousand followers by doing exactly what its name promises: tweeting “IN MICE” in response to current science news headlines. The account is the science nerd equivalent of adding “in bed” to the end of any sentence for a laugh: “Low-protein, high carb diet could help stave off dementia … in mice.” “Ketamine may relieve depression by repairing damaged brain circuits … in mice.” You get the idea. The tweets are amusing and point to a real issue in science: over-hyped statements that make scientific findings look closer to clinical application than they actually are. But the account attributes that problem to the wrong source. It’s not just that science journalists don’t know how to talk about science; scientists themselves don’t know either.

In a recent article on Medium, scientist James Heathers blames the sensationalized news headlines that he critiques with his @justsaysinmice account on sloppy science news reporting. What really “grinds his gears,” Heathers writes, is when journalists report on animal research in a way that makes it seem as though the findings are directly relevant to humans. Eliding that difference, he writes, “is like pointing at a pile of two-by-fours and a bag of tenpenny nails and calling it a cottage.”

Heathers is right to call out some particularly egregious reporting tactics that seem designed to mislead, such as headlines that talk about people when the article actually describes animal research. But the problem goes far deeper than bad science reporting. As an ethnographer who spent years in mouse behavior genetics laboratories, I have watched scientists themselves struggle to find ways of talking about the relationship between mice and humans in their own experiments.

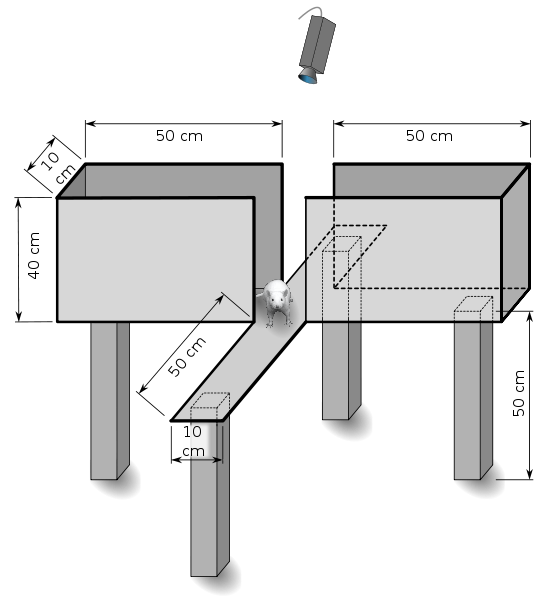

Take, for example, the elevated plus maze (above), a behavioral test for mice widely used in developing new drugs to treat anxiety. The test uses a simple maze in the shape of a plus sign. Two arms of the plus sign are enclosed by high walls to create narrow corridors, and the other two are open ledges. Scientists place a mouse at the center of the maze and then measure how much time it spends in the “open” versus the “closed” arms. The more time a mouse spends in the open areas of the maze, the less anxious it is said to be.

The scientists I studied were painfully aware that a mouse who refused to venture out into the open arms of a plastic maze was not directly comparable to a person suffering from an anxiety disorder. They were quick to point out that we have no way of knowing what a mouse in that situation was feeling, and that there was plenty of experimental evidence to suggest that the test captured some facets of human anxiety but missed others.

The verbal shorthand that they used to convey the gap between mouse and human was to refer to the maze as a “test of anxiety-like behavior.” The “anxiety-like” was critical for these scientists — it reminded them that what they were studying was not anxiety, but something like it. Exactly how their animal models were unlike human anxiety was not yet fully known, and so the phrase remained vague. These scientists had similarly cumbersome ways of speaking about “tests of depression-related phenotypes” or “behaviors relevant to schizophrenia.” They used these phrases almost ritualistically, even in casual conversations and in the private spaces of the laboratory. Newcomers to the laboratory, such as myself, were speedily corrected when they slipped into the simplification of referring to the elevated plus maze as a “test of anxiety.”

A human version of the elevated plus maze is available to view on YouTube. Note the description of the maze as a tool for studying “human anxiety-related behavior.”

This vocabulary for communicating uncertainty worked well in groups of like-minded behavioral scientists, but fell apart quickly outside of them. Even fellow scientists from other disciplines were confused about why their seemingly pedantic colleagues insisted on calling mouse behavior “anxiety-like.” The situation was even worse when I watched scientists talking about their research to journalists, who saw the scientists’ cautionary phrases as mere jargon.

My research in animal behavior genetics laboratories led me to conclude that scientists need new, more sophisticated vocabularies for expressing degrees of certainty and uncertainty in their research. Vague terms such as “anxiety-like” are very much like the “weasel words” that science journalists get critiqued for using — Ketamine may relieve depression, low-protein diets could help stave off dementia. Both types of phrases give little information about how much distance there is between mouse and human, or how much confidence readers should place in these results.

The problem of how to talk about uncertainty in science is one that’s getting worse, not better. In recent years, the vocabulary that many scientists use to express how confident they are in their findings (significance testing and p-values) has come under attack. Critics point to important problems with how p-values are used in science today, but the fact remains that p-values are one of the few shared vocabularies that scientists have for expressing degrees of confidence in their findings.

It is easy to blame bad reporting for making scientific findings seem more certain and relevant than they actually are, but this ignores the imprecision in how scientists themselves talk about translational research. Heathers claims that adding “in mice” to science news headlines is an easy “fix” to the problem of animal research being taken out of context. This move fixes one problem, but leaves a deeper one unresolved. Even when a headline clearly states that the original study was done in mice, one person’s impression of how close that study is to clinical application is likely to be very different from another person’s. We need better scientific vocabularies that will help readers calibrate their sense of certainty. Solving that problem must begin with scientists, not science journalists.